Over the last two years, Large Language Models (LLMs) have reshaped how businesses think about automation and customer experience. From GPT-style assistants to sophisticated WhatsApp bots, the industry is flooded with bold promises—“human-like conversations,” “instant resolution,” “self-learning bots,” and more.

But there is a wide gap between the hype and the results businesses actually achieve.

This blog breaks down what LLM-powered chatbots can truly deliver today, where they struggle, and how organisations should realistically adopt them for customer engagement.

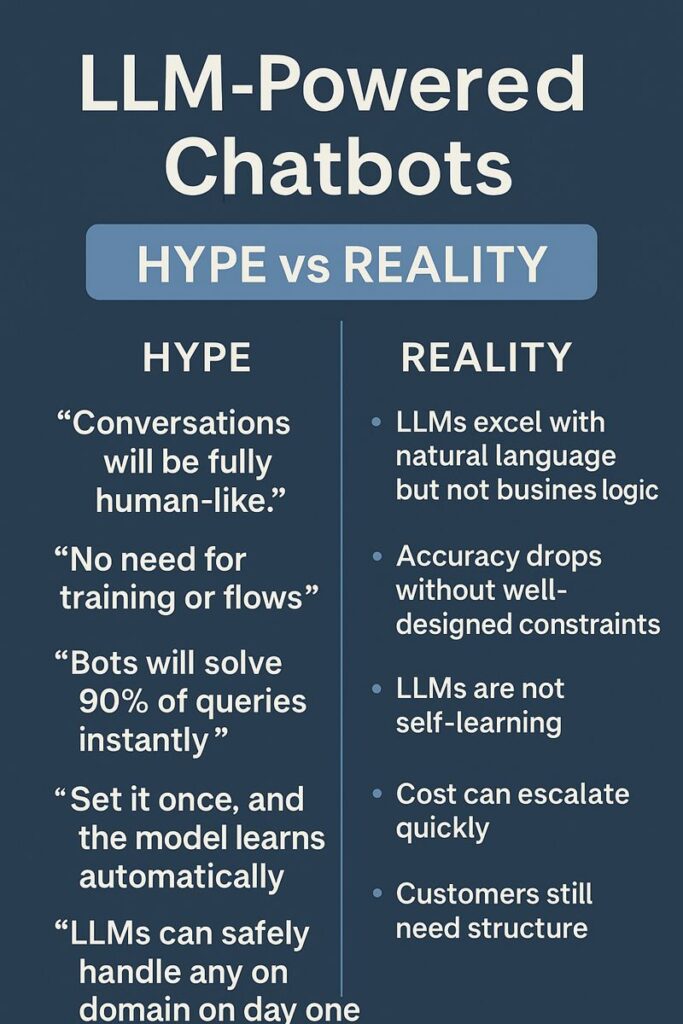

The Hype: What Marketers & Vendors Promise

LLMs have supercharged expectations. The hype usually sounds like this:

1. “Conversations will be fully human-like.”

Marketers showcase bots that understand slang, emotion, and complex context, as if customers were chatting with real people.

2. “No need for training or flows.”

LLMs supposedly eliminate the need for conversation design, structured workflows, FAQs, or scripts.

3. “Bots will solve 90% of queries instantly.”

The dream: near-zero human intervention, blazing-fast replies, and perfect accuracy.

4. “Set it once, and the model learns automatically.”

The belief that LLMs will improve on their own just by interacting with customers.

5. “LLMs can safely handle any domain on day one.”

From healthcare to banking to legal queries, vendors often imply that LLMs are universally reliable.

Sounds incredible. But is this the real picture today?

Let’s have an honest look.

The Reality: What LLM Chatbots Can Actually Do

LLMs do bring breakthrough capabilities, but within limits. Here’s the practical truth:

1. LLMs excel at natural language, but not business logic

They understand queries, rephrase text, summarise documents, classify intents, and handle chit-chat beautifully.

But they don’t know your policies, your pricing, your workflows, or your integrations unless explicitly provided.

For business engagement, LLMs must be combined with structured workflows, APIs, and guardrails.

2. Accuracy drops without well-designed constraints

Left open-ended, LLMs may:

- hallucinate answers

- misinterpret edge cases

- provide outdated or incorrect responses

- sound overly confident even when wrong

This is risky in BFSI (banking, financial services, and insurance), healthcare, travel, and e-commerce.

LLM + rule-based systems = safer, more scalable automation.
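Here is one common way to combine an LLM with rules. The LLM only proposes a structured action. Deterministic rules then decide whether that action runs, goes to a human, or is rejected.

This is a minimal Python sketch under stated assumptions: the `ProposedAction` schema, the action names, and the refund limit are hypothetical. The LLM call that would produce the proposal is left out.

```python
from dataclasses import dataclass

# Hypothetical business rules; replace with your real policy values.
MAX_AUTO_REFUND = 50.00
ALLOWED_ACTIONS = {"track_order", "refund", "faq_answer"}


@dataclass
class ProposedAction:
    """Structured output we would ask the LLM to produce (hypothetical schema)."""
    action: str
    amount: float = 0.0


def validate(proposal: ProposedAction) -> str:
    """Rule layer: decide what happens to the LLM's proposal."""
    if proposal.action not in ALLOWED_ACTIONS:
        return "reject"    # the model invented an action we don't support
    if proposal.amount < 0:
        return "reject"    # nonsensical amount
    if proposal.action == "refund" and proposal.amount > MAX_AUTO_REFUND:
        return "escalate"  # above the auto-approval limit, so a human decides
    return "execute"


print(validate(ProposedAction("refund", 20.0)))    # execute
print(validate(ProposedAction("refund", 500.0)))   # escalate
print(validate(ProposedAction("cancel_account")))  # reject
```

The model never executes anything directly. A wrong or invented proposal fails the rule check instead of reaching the customer.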

3. LLMs are not self-learning

They don’t automatically “remember” or “improve” from conversations unless you build a feedback loop and a custom training (fine-tuning) pipeline.

Most businesses skip this due to cost and compliance.

Ongoing optimisation is a human-driven process, not an automatic one.

4. Cost can escalate quickly

While the per-call cost seems small, real-world usage brings:

- heavy token consumption (long prompts, retrieved context, and conversation history sent on every turn)

- unexpected spikes during campaign peaks

- higher infrastructure requirements

- safety layer costs

LLMs must be used selectively, not everywhere.
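A back-of-the-envelope calculation shows how a small per-call cost compounds. The Python sketch below uses hypothetical prices and traffic figures, not any vendor's actual pricing. Substitute your own numbers.

```python
def monthly_llm_cost(
    conversations_per_day: int,
    turns_per_conversation: int,
    input_tokens_per_turn: int,   # prompt + retrieved context + history, averaged
    output_tokens_per_turn: int,
    price_in_per_million: float,  # hypothetical price per 1M input tokens
    price_out_per_million: float, # hypothetical price per 1M output tokens
    days: int = 30,
) -> float:
    """Rough monthly spend, in the same currency as the per-million-token prices."""
    turns = conversations_per_day * turns_per_conversation * days
    input_cost = turns * input_tokens_per_turn * price_in_per_million / 1_000_000
    output_cost = turns * output_tokens_per_turn * price_out_per_million / 1_000_000
    return input_cost + output_cost


# All figures below are illustrative assumptions.
baseline = monthly_llm_cost(10_000, 6, 2_000, 200, 1.0, 4.0)
campaign = monthly_llm_cost(30_000, 6, 2_000, 200, 1.0, 4.0)  # traffic triples
print(f"Baseline month: ${baseline:,.0f}")  # Baseline month: $5,040
print(f"Campaign month: ${campaign:,.0f}")  # Campaign month: $15,120
```

With these assumed numbers, a single turn costs about $0.0028, yet the month comes to roughly $5,040. A campaign that triples traffic triples the bill. Input tokens dominate the total because the prompt, context, and history are resent on every turn.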

5. Customers still need structure

A purely free-flowing bot may feel magical initially, but customers still want:

- quick buttons

- predictable actions

- guided journeys

- recognisable flows

This is why WhatsApp Flows and structured UX won’t disappear.

Hybrid = best experience.

Where LLM-Powered Chatbots Shine Today

LLMs are transformational when applied to the right problems:

✔ Handling long-tail queries

LLM bots can answer unusual or complex questions that rule-based bots cannot anticipate.

✔ Intelligent FAQ & knowledge retrieval

Given access to your knowledge base (typically through retrieval), they can answer conversationally.

✔ Support agent assist

LLM-powered internal copilots boost agent productivity.

✔ Summarising conversations

Great for ticketing workflows and CRM integration.

✔ Nuanced classification & intent detection

They often significantly outperform traditional NLP systems.

✔ Personalised communication at scale

For product recommendations, email drafting, WhatsApp responses, etc.

This is where ROI is real and measurable.

Where They Struggle or Should Be Limited

✘ Regulated communications

BFSI (including insurance) and healthcare have effectively zero tolerance for inaccurate or non-compliant answers.

✘ Transactional flows

Order placement, refunds, KYC → better handled by APIs + flows.

✘ Sensitive judgement calls

Legal advice and medical interpretation call for qualified human judgement.

✘ Open-ended emotional support

High risk of inappropriate or non-compliant responses.

LLMs are assistants, not decision-makers.

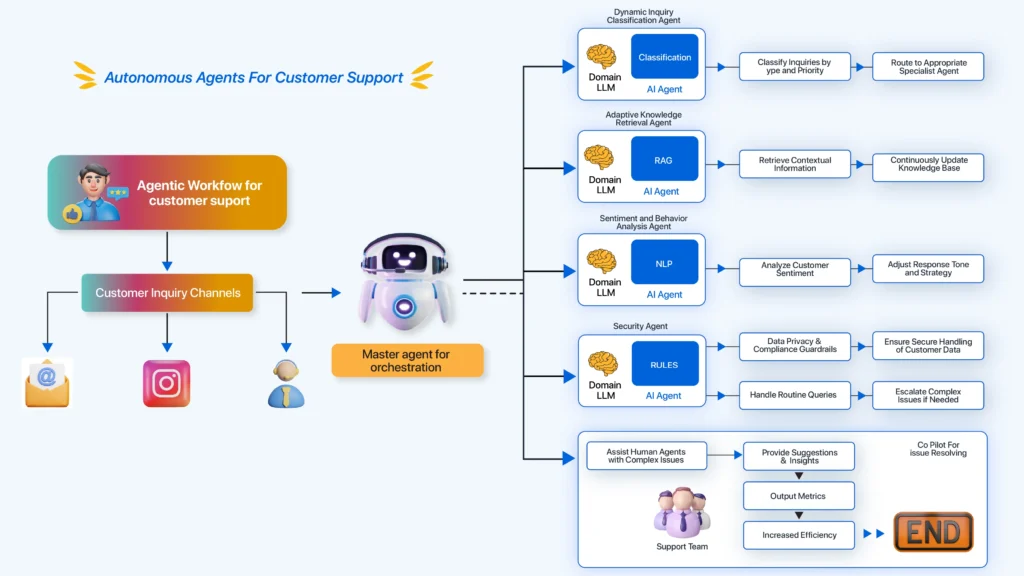

The Ideal Model: Hybrid Chatbots

Many successful deployments today use a hybrid AI framework with four layers:

1. Rule-based automation

For predictable, high-volume tasks.

2. LLM layer

For complex interpretation, personalisation, and long-tail queries.

3. Human fallback

For high-risk or sensitive scenarios.

4. Guardrails + vector search

To reduce hallucinations and ground responses in your own verified content.

This pattern underpins many enterprise-grade AI deployments today.
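The four layers can be sketched as a single routing function. This is a minimal, dependency-free Python illustration under stated assumptions:

- The keyword rules, sensitive-topic list, knowledge base, and similarity threshold are all hypothetical.
- Word-count vectors stand in for real embeddings and a vector database.
- `call_llm` is a placeholder, not a real API.

```python
import math
import re
from collections import Counter

# Layer 1: rule-based intents for predictable, high-volume tasks (hypothetical).
RULES = {
    "track": "Tap 'Track order' and enter your order ID.",
    "refund": "Starting the refund flow...",
}
# Layer 3 trigger: topics that always go to a human (hypothetical list).
SENSITIVE = {"lawyer", "diagnosis", "complaint", "fraud"}
# Layer 4: a toy knowledge base; real systems store embeddings in a vector DB.
KNOWLEDGE_BASE = [
    "Standard delivery takes 3 to 5 business days.",
    "You can change your delivery address before the order ships.",
]


def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (stand-in for embeddings)."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str) -> tuple[str, float]:
    """Return the most similar knowledge-base entry and its score."""
    q = Counter(tokens(query))
    scored = [(doc, cosine(q, Counter(tokens(doc)))) for doc in KNOWLEDGE_BASE]
    return max(scored, key=lambda pair: pair[1])


def call_llm(question: str, context: str) -> str:
    # Placeholder: a real prompt would instruct the model to answer only from `context`.
    return f"(LLM answer grounded in: {context!r})"


def route(message: str, min_score: float = 0.2) -> tuple[str, str]:
    words = set(tokens(message))
    if words & SENSITIVE:                   # Layer 3: human fallback for risky topics
        return "human", "Connecting you to an agent."
    for keyword, reply in RULES.items():    # Layer 1: rule-based automation
        if keyword in words:
            return "rule", reply
    doc, score = retrieve(message)          # Layer 4: retrieval guardrail
    if score < min_score:
        return "human", "I'm not sure; connecting you to an agent."
    return "llm", call_llm(message, doc)    # Layer 2: grounded LLM answer


print(route("Where can I track my parcel?")[0])           # rule
print(route("I want to file a complaint")[0])             # human
print(route("How long does standard delivery take?")[0])  # llm
print(route("Do you sell gift cards?")[0])                # human
```

Sensitive topics are checked first, so risky messages never reach the LLM. The similarity threshold acts as a simple guardrail: when nothing in the knowledge base matches well enough, the bot hands over to a human instead of letting the model guess.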

What Businesses Should Truly Expect in 2025

- 50–70% automation, not 100%

- Noticeably improved CSAT (customer satisfaction) for support flows

- Lower operational cost with agent-assist tools

- More personalised customer conversations

- Faster resolution of long-tail queries

- Reduced time-to-launch for new bots

- Increased reliance on knowledge-based chatbots

- Need for continuous monitoring, tuning, and governance

In simple terms:

LLMs don’t replace humans. They amplify them.
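Continuous monitoring can start with something as simple as measuring how conversations end. The sketch below assumes each conversation is logged with a hypothetical outcome label (`rule`, `llm`, or `human`). It computes the automation rate so you can compare it against the 50–70% expectation above. The sample data is made up for illustration.

```python
from collections import Counter


def automation_report(outcomes: list[str]) -> dict[str, float]:
    """Share of conversations resolved by each layer, plus the overall automation rate."""
    total = len(outcomes)
    if total == 0:
        return {"rule": 0.0, "llm": 0.0, "human": 0.0, "automated": 0.0}
    counts = Counter(outcomes)
    report = {layer: counts[layer] / total for layer in ("rule", "llm", "human")}
    # "Automated" means resolved without a human, by either the rule or the LLM layer.
    report["automated"] = (counts["rule"] + counts["llm"]) / total
    return report


# Hypothetical sample of 100 logged conversations.
sample = ["rule"] * 40 + ["llm"] * 20 + ["human"] * 40
report = automation_report(sample)
print(f"Automated: {report['automated']:.0%}, human handover: {report['human']:.0%}")
# Automated: 60%, human handover: 40%
```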

Conclusion: LLM Chatbots Are Powerful—but Not Magic

LLMs represent the biggest leap in customer communication since the launch of the WhatsApp Business API. But success comes from thoughtful implementation, not hype.

Businesses that win with LLMs are the ones that:

✔ use them where they truly shine

✔ combine them with structured automation

✔ build safe and compliant workflows

✔ provide human fallback

✔ continuously monitor and refine

If you’re looking to integrate LLM-powered chatbots into your customer engagement strategy, whether for WhatsApp, Web, or omnichannel, this is the perfect time to start experimenting intelligently.
